How to Turn a VTuber Model into an AI Model: 2025 Guide

In 2025, the world of VTubing has evolved beyond simple avatars and motion capture. The next big step? AI VTubers — intelligent virtual personas capable of interacting with audiences, learning from conversations, and performing without human control. But the real question many creators ask is: how do you turn a VTuber model into an AI model?

In this detailed guide, we’ll explore the full process — from understanding what an AI VTuber really is, to converting your existing model into a self-running, AI-powered character. We’ll also show how companies like ARwall are using virtual production and real-time technology to revolutionize digital identity and interactive entertainment.

1. Understanding the Basics: What Is a VTuber Model?

Before diving into AI integration, it’s essential to understand what a VTuber model actually is. A VTuber model is a digital avatar — 2D (Live2D) or 3D (VRM/Blender/Unity-based) — animated in real-time through motion tracking, facial capture, or voice sync software.

If you’re new to VTubing, check out these detailed guides from ARwall:

Each of these articles covers the foundational steps — from designing a model to setting up your streaming environment — all of which are necessary before AI transformation.

2. What Is an AI VTuber?

Are AI VTubers actually AI?

This is one of the most common questions in the VTubing community — and the answer depends on how “AI” is being used.

Many popular AI VTubers are partially AI — meaning that while their dialogue or responses may be generated by artificial intelligence (like ChatGPT or proprietary LLMs), the visual model and movements are still manually designed or semi-automated.

However, true AI VTubers — the next generation — can autonomously:

- Generate speech in real time using text-to-speech (TTS)
- Read chat messages and reply dynamically
- Use facial and emotional AI for reactions
- Stream and perform 24/7 without human input

In short, AI VTubers are digital personalities powered by machine learning, natural language processing (NLP), and real-time rendering.

ARwall’s real-time virtual production tools have been instrumental in merging human creativity and AI-driven digital characters, bridging entertainment and automation.

3. The Core Process: Turning a VTuber Model into an AI Model

Now that we understand the concept, let’s break down the step-by-step process to turn your VTuber into an AI-powered model.

Step 1: Choose or Create Your VTuber Model

You can either design your own model or start with a premade VTuber model.

Can I use a premade VTuber model?

Yes, absolutely. Many AI VTubers begin with customizable premade models from sites like Booth, VRoid Hub, or Nizima. These models usually come already rigged and can be adapted to AI systems with minor adjustments.

ARwall’s virtual production expertise ensures your model can be seamlessly integrated into cinematic environments using LED and XR stages — perfect for creators aiming to build immersive AI-driven shows.

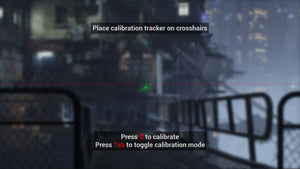

Step 2: Convert the Model into a Usable Format

Your model must be in a format that real-time software can drive: Unity or Unreal Engine for 3D models, or a Live2D-compatible app such as VTube Studio for 2D models.

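- For 2D VTubers: Keep the Live2D Cubism export (the .moc3 file with its model3.json and textures) and load it into software that exposes an API, such as VTube Studio, so Python or other AI scripts can control it.
- For 3D VTubers: Export your model as a VRM or FBX file and import it into Unity or Unreal.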

ARwall uses Unreal Engine for its XR and virtual production systems, allowing hyperrealistic motion and environment rendering — ideal for AI-driven avatars.
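Before importing a 3D model, it can help to confirm that the exported file really is a VRM. A .vrm file is a binary glTF 2.0 (GLB) container. VRM 0.x models declare the `VRM` extension, and VRM 1.0 models declare `VRMC_vrm`. The Python sketch below reads only the GLB header and JSON chunk and reports which version the file declares. The names `vrm_version` and `make_glb` are illustrative and are not part of any VRM tooling.

```python
import json
import struct
from typing import Optional

GLB_MAGIC = 0x46546C67  # b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # b"JSON" read as a little-endian uint32


def vrm_version(data: bytes) -> Optional[str]:
    """Return "1.0" or "0.x" if the GLB declares a VRM extension, else None."""
    if len(data) < 20:
        raise ValueError("file too short to be a GLB container")
    magic, version, total_length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC or version != 2:
        raise ValueError("not a glTF 2.0 binary (GLB) file")
    if total_length > len(data):
        raise ValueError("file is truncated")
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk is not JSON")
    gltf = json.loads(data[20:20 + chunk_length].decode("utf-8"))
    used = set(gltf.get("extensionsUsed", []))
    if "VRMC_vrm" in used:  # VRM 1.0
        return "1.0"
    if "VRM" in used:  # VRM 0.x
        return "0.x"
    return None


def make_glb(gltf: dict) -> bytes:
    """Build a minimal JSON-only GLB, handy for testing vrm_version."""
    payload = json.dumps(gltf).encode("utf-8")
    payload += b" " * (-len(payload) % 4)  # JSON chunk is space-padded to 4 bytes
    header = struct.pack("<III", GLB_MAGIC, 2, 12 + 8 + len(payload))
    return header + struct.pack("<II", len(payload), CHUNK_JSON) + payload
```

Calling `vrm_version` on the bytes of your exported file returns `"1.0"`, `"0.x"`, or `None`. The actual import still happens in UniVRM for Unity or in a plugin such as VRM4U for Unreal.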

Step 3: Integrate an AI Chat Engine

This is where your VTuber becomes intelligent.

You can integrate a language model API (like GPT-based systems, OpenAI’s API, or custom fine-tuned chatbots) that enables your avatar to:

- Read chat messages
- Understand user intent
- Generate natural, context-aware responses

Here’s how this step works technically:

- Use a Python or C# script to send incoming chat messages to the AI API.
- The AI processes each message and returns a text response.
- That text is then fed to a text-to-speech (TTS) system.
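The three-step flow above can be sketched in Python as a small pipeline with swappable stages. This is a minimal sketch under stated assumptions. `VTuberPipeline`, `ChatMessage`, `fake_llm`, and `fake_tts` are hypothetical names. The two stubs stand in for whatever LLM SDK and TTS service you actually call, so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ChatMessage:
    author: str
    text: str


@dataclass
class VTuberPipeline:
    """Chat message -> LLM reply text -> TTS audio, with swappable stages."""

    generate_reply: Callable[[str], str]  # wrap your LLM API call here
    synthesize: Callable[[str], bytes]  # wrap your TTS service call here
    max_chars: int = 300  # keep spoken replies short enough for live chat
    history: List[str] = field(default_factory=list)

    def handle(self, msg: ChatMessage) -> bytes:
        prompt = f"{msg.author} says: {msg.text}"
        reply = self.generate_reply(prompt).strip()[: self.max_chars]
        self.history.append(reply)
        return self.synthesize(reply)


# Stand-ins so the sketch runs offline; replace with real API calls.
def fake_llm(prompt: str) -> str:
    return "Thanks for the message! You said: " + prompt.split(": ", 1)[1]


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # a real TTS call would return audio bytes


pipe = VTuberPipeline(generate_reply=fake_llm, synthesize=fake_tts)
audio = pipe.handle(ChatMessage("Luna", "hello!"))
```

Keeping each stage as a plain function makes it easy to swap providers, for example moving from one TTS service to another, without touching the chat-handling logic.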

Result: Your VTuber speaks naturally and responds like a real person.

Step 4: Add Voice and Lip Syncing

You’ll need AI-driven voice synthesis (such as ElevenLabs, Coqui, or Azure TTS) to give your avatar a realistic voice.

Combine that with a lip-sync system:

- For Live2D: Use VTube Studio or PrprLive with API plugins.
- For 3D: Connect OVRLipSync or uLipSync in Unity.

This keeps your AI's voice and your model's mouth movements in sync.
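OVRLipSync analyzes audio into visemes. A cruder but common fallback is to open the mouth in proportion to loudness, and the sketch below takes that simplified amplitude-based approach rather than viseme detection. It computes one 0 to 1 mouth-open value per video frame from float PCM samples. It then wraps a value in a VTube Studio API `InjectParameterDataRequest` message for the default `MouthOpen` parameter. The message shape follows VTube Studio's public WebSocket API as we understand it, so check it against the official API docs. A plugin must also authenticate before VTube Studio accepts injected values. The `noise_floor` and `gain` defaults are arbitrary starting points.

```python
import json
import math
from typing import List, Sequence


def mouth_open_values(samples: Sequence[float], sample_rate: int = 16000,
                      fps: int = 30, noise_floor: float = 0.02,
                      gain: float = 4.0) -> List[float]:
    """One 0..1 mouth-open value per video frame, from RMS loudness."""
    frame_len = max(1, sample_rate // fps)
    values = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        # Ignore background hiss below noise_floor, then scale and clamp to 1.0.
        values.append(min(1.0, max(0.0, rms - noise_floor) * gain))
    return values


def vts_mouth_request(value: float, request_id: str = "lipsync") -> str:
    """VTube Studio API message injecting a value into the MouthOpen parameter."""
    return json.dumps({
        "apiName": "VTubeStudioPublicAPI",
        "apiVersion": "1.0",
        "requestID": request_id,
        "messageType": "InjectParameterDataRequest",
        "data": {"parameterValues": [{"id": "MouthOpen", "value": value}]},
    })
```

In a live setup you would compute these values while the TTS audio plays and send one message per frame over VTube Studio's WebSocket connection.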

Step 5: Implement Emotional AI

A truly engaging AI VTuber reacts emotionally. Emotional AI helps your model understand sentiment and react accordingly:

- Happy tone → smile, sparkle eyes
- Sad tone → softer voice, drooping eyes
- Excited tone → faster gestures

You can integrate facial animation triggers in Unreal Engine or Unity based on sentiment analysis of the AI’s generated text.
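Before you wire in a real sentiment model or API, you can prototype the idea with a tiny keyword lexicon. In this sketch the word lists, tone labels, and trigger names (`smile`, `sparkle_eyes`, and so on) are all hypothetical. Map the returned names to the expressions, hotkeys, or animation triggers your rig actually exposes.

```python
import re
from typing import Dict, List

# Toy word lists; a real setup would call a sentiment model or API instead.
LEXICON: Dict[str, List[str]] = {
    "happy": ["love", "great", "thanks", "yay", "awesome"],
    "sad": ["sorry", "sad", "miss", "lost", "unfortunately"],
    "excited": ["wow", "amazing", "hype", "incredible", "omg"],
}

# Hypothetical trigger names; map these to your rig's expressions or hotkeys.
TRIGGERS: Dict[str, List[str]] = {
    "happy": ["smile", "sparkle_eyes"],
    "sad": ["softer_voice", "droop_eyes"],
    "excited": ["fast_gestures"],
    "neutral": [],
}


def classify_tone(text: str) -> str:
    """Count lexicon hits per tone; ties go to the tone listed first."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {tone: sum(w in vocab for w in words) for tone, vocab in LEXICON.items()}
    best = max(scores, key=lambda tone: scores[tone])
    return best if scores[best] > 0 else "neutral"


def expression_triggers(reply: str) -> List[str]:
    """Expression triggers to fire for one AI-generated reply."""
    return TRIGGERS[classify_tone(reply)]
```

Running the classifier on the AI's own reply, rather than on the viewer's message, keeps the avatar's face consistent with what it is saying.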

At ARwall, we specialize in real-time facial tracking and motion systems, which can be repurposed for AI-driven emotional responses in both digital humans and VTubers.

Step 6: Enable Autonomy and Scheduling

Once you’ve built your AI engine and animation systems, set up automated streaming:

- Schedule live sessions via Twitch/YouTube API
- Auto-start OBS with preprogrammed scenes
- Run AI scripts that monitor chat and respond autonomously

You now have a fully autonomous AI VTuber model — a self-sustaining digital personality capable of streaming, performing, and interacting with fans around the clock.
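An autonomous chat loop also needs to decide which messages to answer, or the avatar will talk over itself during busy streams. The hypothetical `ChatResponder` class below shows one way to do that. It skips empty messages and `!` commands meant for other chat bots, ignores recent duplicates, and allows at most one reply per cooldown window. Fetching messages from the Twitch or YouTube API is left out.

```python
import time
from collections import deque
from typing import Callable, Deque, Optional


class ChatResponder:
    """Decide which chat messages the AI should answer."""

    def __init__(self, cooldown: float = 8.0,
                 clock: Callable[[], float] = time.monotonic,
                 memory: int = 20):
        self.cooldown = cooldown  # minimum seconds between spoken replies
        self.clock = clock
        self.last_reply_at: Optional[float] = None
        self.recent: Deque[str] = deque(maxlen=memory)  # recently seen messages

    def should_reply(self, text: str) -> bool:
        normalized = text.strip().lower()
        if not normalized or normalized.startswith("!"):
            return False  # empty, or a command meant for a chat bot
        if normalized in self.recent:
            return False  # spam or a repeated question
        self.recent.append(normalized)  # recorded even if the cooldown blocks it
        now = self.clock()
        if self.last_reply_at is not None and now - self.last_reply_at < self.cooldown:
            return False
        self.last_reply_at = now
        return True
```

Passing in `clock` makes the cooldown easy to test. A message skipped during the cooldown is still recorded as seen, so an identical repeat shortly afterward is ignored too.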

4. Tools and Technologies You’ll Need

To convert your VTuber into an AI model, you’ll need the following:

| Category | Tool/Software | Purpose |
|---|---|---|
| Modeling | VRoid Studio / Blender / Live2D | Create or edit your VTuber |
| Rigging | Unity / Unreal Engine / VSeeFace | Animate the model |
| AI Engine | OpenAI API / GPT / Kobold / Rasa | Generate responses |
| Voice | ElevenLabs / Coqui / Azure TTS | AI text-to-speech voice |
| Lip Sync | OVRLipSync / VTube Studio | Mouth movements |
| Emotion AI | Affectiva / Sentiment APIs | Emotional reaction |
| Streaming | OBS / VDO.Ninja | Broadcast setup |

ARwall’s Unreal-based virtual production environment can integrate all these layers — model, voice, lighting, and emotion — to create AI VTubers that feel cinematic and alive.

5. Can AI Turn You into an Anime Character?

Yes — and it’s easier than ever in 2025.

AI tools like LivePortrait, Animaze, and Snapchat's Lens Studio can drive anime-style characters with real facial footage or apply anime-style filters to live video. Combine that with ARwall's XR technology, and creators can appear inside anime worlds, interacting live through LED or virtual stages.

This fusion of AI, anime, and virtual production is opening a new era for VTubing — where anyone can become their animated alter ego.

6. Advanced AI Integration for VTubers

Turning your VTuber model into an AI model doesn’t stop at basic automation. The next level involves AI cognition, personality design, and adaptive learning. Let’s explore the advanced tools and techniques.

a. Personality Training

A good AI VTuber has a unique “soul.”

You can train your AI model to have a specific personality, backstory, and tone using prompt engineering or fine-tuning methods.

For example:

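- Use a detailed system prompt, OpenAI's Custom GPTs, or a fine-tuned LLM to define your VTuber's character traits. Custom GPTs run inside ChatGPT, so a streaming bot that calls the API usually relies on a system prompt or fine-tune instead.
- Add dialogue examples, favorite phrases, and lore.
- Include boundaries or mood patterns; for instance, your AI VTuber could be cheerful on weekends or sarcastic when trolled.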
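A persona definition like this can be turned into the system prompt and few-shot examples that most chat-style LLM APIs accept. In this sketch, `Persona` and `build_messages` are hypothetical helpers. The `role`/`content` message format is the common chat-completion convention used by OpenAI's API and many others, so adapt it to whichever model you call.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Persona:
    name: str
    backstory: str
    traits: List[str]
    catchphrases: List[str] = field(default_factory=list)
    boundaries: List[str] = field(default_factory=list)

    def system_prompt(self) -> str:
        lines = [
            f"You are {self.name}, a VTuber. Stay in character.",
            f"Backstory: {self.backstory}",
            "Personality: " + ", ".join(self.traits) + ".",
        ]
        if self.catchphrases:
            lines.append("Favorite phrases: " + "; ".join(f'"{p}"' for p in self.catchphrases))
        lines.extend(f"Rule: {rule}" for rule in self.boundaries)
        return "\n".join(lines)


def build_messages(persona: Persona, examples: List[Dict[str, str]],
                   user_text: str) -> List[Dict[str, str]]:
    """System prompt, then example exchanges, then the new chat message."""
    messages = [{"role": "system", "content": persona.system_prompt()}]
    for ex in examples:  # few-shot examples teach tone and favorite phrases
        messages.append({"role": "user", "content": ex["user"]})
        messages.append({"role": "assistant", "content": ex["reply"]})
    messages.append({"role": "user", "content": user_text})
    return messages
```

Keeping the persona in structured fields, rather than in one hand-edited prompt, makes it easier to tweak traits or add lore without breaking the rest of the prompt.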

This results in an avatar that behaves consistently and memorably, making audiences feel like they’re interacting with a real digital being.

b. Memory and Adaptive Learning

AI memory allows your VTuber to recall past conversations and build stronger fan relationships.

Imagine a viewer returning a week later, and your AI VTuber greets them with:

“Hey Luna! You mentioned last time you were preparing for your art contest — how did it go?”

This kind of continuity makes viewers feel remembered, which can significantly boost engagement.

Memory can be stored locally or via APIs such as:

- Pinecone or Supabase for vector memory
- LangChain or LlamaIndex for long-term recall
- Custom databases for personal interaction logs
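The retrieval idea behind vector memory can be shown without any external service. In the sketch below, a bag-of-words counter stands in for a real embedding model and a Python dict stands in for Pinecone, Supabase, or another vector store. `ViewerMemory`, `embed`, and `cosine` are hypothetical names. Notes are stored per viewer and recalled by cosine similarity to the current message.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; swap in a real embedding model in practice."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ViewerMemory:
    def __init__(self) -> None:
        self.notes: Dict[str, List[Tuple[str, Counter]]] = {}

    def remember(self, viewer: str, note: str) -> None:
        self.notes.setdefault(viewer, []).append((note, embed(note)))

    def recall(self, viewer: str, query: str, k: int = 2) -> List[str]:
        """Top-k notes about this viewer that share any words with the query."""
        q = embed(query)
        scored = [(cosine(q, vec), note) for note, vec in self.notes.get(viewer, [])]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [note for score, note in scored[:k] if score > 0]
```

The recalled notes would then be added to the LLM prompt, so the model can produce a greeting like the "art contest" example above.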

ARwall’s virtual environments can enhance this interaction further — combining AI-driven performance with cinematic XR backdrops for visual storytelling.

c. Gesture and Emotion Automation

Integrating AI-based gesture generation adds life-like realism to your VTuber model.

Markerless motion-capture tools like OpenPose, DeepMotion, or Radical Motion can extract body movement from ordinary video, giving you a library of gesture animations. Your AI can then trigger the gestures that match the tone of its speech.

Example:

- When the AI says something happy → add arm waves and smile animations.
- When the AI gets surprised → trigger a jump or eye sparkle.
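Once you have a set of gesture clips, recorded by hand or captured with a motion-capture tool, the remaining logic is choosing one for each tone. The hypothetical `GesturePicker` below picks a random clip for a tone label, such as one produced by a sentiment step. It never picks the same clip twice in a row, so a run of happy lines doesn't trigger the identical wave every time. The clip names are placeholders for whatever animations your rig has.

```python
import random
from typing import Dict, List, Optional

# Hypothetical clip names; use the animation clips or hotkeys your rig actually has.
GESTURES: Dict[str, List[str]] = {
    "happy": ["wave_arms", "clap", "smile_nod"],
    "surprised": ["jump", "eye_sparkle", "lean_back"],
}


class GesturePicker:
    """Choose a gesture clip for a tone, avoiding the same clip twice in a row."""

    def __init__(self, gestures: Dict[str, List[str]], seed: Optional[int] = None):
        self.gestures = gestures
        self.rng = random.Random(seed)  # seedable for reproducible tests
        self.last: Optional[str] = None

    def pick(self, tone: str) -> Optional[str]:
        options = self.gestures.get(tone, [])
        if not options:
            return None  # no gesture defined for this tone
        # Exclude the previous clip unless it is the only option.
        fresh = [clip for clip in options if clip != self.last] or options
        self.last = self.rng.choice(fresh)
        return self.last
```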

ARwall's virtual production pipeline already uses real-time motion automation for performers on LED stages, and the same approach can be applied to AI VTubers for immersive, human-like movement.

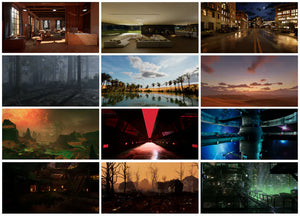

d. AI-driven Visual Environments

Instead of static backgrounds, use AI-generated worlds to place your VTuber in dynamic, evolving scenes.

With Unreal Engine and ARwall’s LED volume stage technology, your AI VTuber can appear in:

- A futuristic cyber city
- A cozy anime café
- A fantasy forest that changes based on audience mood
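For a forest that changes with audience mood, one simple approach is to score each chat message's sentiment from -1 to 1 with any sentiment API. You then smooth the scores with an exponential moving average so the scene does not flicker. The `AudienceMood` class, its thresholds, and the scene names are hypothetical. How a scene name switches the environment depends on your project: it could load a level, apply a lighting preset, or change material parameters in Unreal or Unity.

```python
class AudienceMood:
    """Smooth per-message sentiment (-1..1) into a running mood and map it to a scene."""

    def __init__(self, alpha: float = 0.1):
        self.alpha = alpha  # weight of each new message; smaller = slower changes
        self.mood = 0.0

    def update(self, sentiment: float) -> float:
        sentiment = max(-1.0, min(1.0, sentiment))  # clamp out-of-range scores
        self.mood = (1 - self.alpha) * self.mood + self.alpha * sentiment
        return self.mood

    def scene(self) -> str:
        if self.mood > 0.3:
            return "forest_spring"  # bright, blooming variant
        if self.mood < -0.3:
            return "forest_storm"  # dark, rainy variant
        return "forest_default"
```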

This turns every AI VTuber stream into a cinematic experience, blurring the line between gaming, storytelling, and entertainment.

Learn more about how ARwall’s LED stages and XR stages redefine virtual performance spaces here.

7. Monetizing Your AI VTuber Model

Once your AI VTuber is ready, it can become a profitable digital brand.

Here’s how AI-driven automation can open up multiple income streams.

a. 24/7 Streaming Channels

AI VTubers can stream continuously, engaging global audiences across time zones.

You can run your avatar around the clock — reacting to chat, playing AI-generated games, or telling procedurally generated stories.

Automated livestreams can generate ad revenue, donations, and subscriptions — without constant human input.

b. Personalized Fan Interactions

With conversational AI, fans can talk directly to your VTuber in private sessions.

This can be turned into a subscription service via:

- Patreon
- Discord memberships
- Virtual meet-and-greet platforms

AI personalization means every fan gets a unique experience — like having a conversation with their favorite virtual star.

c. Merchandise and NFTs

Use your AI VTuber’s persona to sell branded merchandise:

- AI-generated art prints
- Animated clips or music videos
- Digital collectibles or NFTs of special moments

These items can even be AI-customized per fan, making each piece exclusive.

d. Brand Collaborations

Brands are now turning to AI influencers for marketing.

An AI VTuber can represent a company 24/7, host campaigns, and deliver interactive product demos — all while maintaining consistent tone and energy.

This fusion of AI performance and ARwall’s cinematic XR backgrounds can create immersive virtual commercials where brands and AI influencers coexist in stunning digital worlds.

8. The Role of ARwall in the Future of AI VTubers

ARwall, known for its pioneering work in virtual production, XR stages, and LED volume environments, is helping shape the next generation of digital performers — including AI VTubers.

Here’s how ARwall’s technology integrates seamlessly into the AI VTuber workflow:

- LED Volume Stages: Create immersive, real-time environments that react to your AI VTuber's behavior. Imagine your AI avatar standing on a real LED wall, surrounded by dynamic worlds.
- Unreal Engine Virtual Production: Power your 3D VTuber model with real-time rendering for cinematic visuals.
- ARFX Pro Plugin: ARwall's Unreal-based plugin can manage lighting, reflections, and camera tracking, all vital for realistic AI-driven characters.
- XR and AR Integration: Combine augmented reality overlays with AI avatars to create hybrid shows that mix real and virtual presence.

In short, ARwall is building the infrastructure that lets AI VTubers perform like real celebrities — blending artificial intelligence, cinematic quality, and interactivity in one ecosystem.

For deeper insights into ARwall’s work in the VTuber and virtual performance space, explore:

9. Common Questions About AI VTubers

Let’s address some frequently asked questions that often come up when creators explore the idea of turning their VTuber models into AI models.

Are AI VTubers actually AI?

Not all VTubers labeled as “AI” are fully autonomous. Many are hybrids — a human performer supported by AI tools for speech, animation, or moderation.

True AI VTubers are driven entirely by AI: they generate their own dialogue, simulate emotion, and stream without a human operator.

Is there an AI that turns you into anime?

Yes! AI-based animation tools such as LivePortrait, ReAnime, and Meitu AI Art can instantly turn real people into anime characters using neural style transfer and face tracking.

Combine this with ARwall’s XR environments, and you can quite literally step inside your anime world in real-time.

How to make a VTuber model AI?

To make your VTuber model AI:

- Build or import a VTuber model.
- Integrate it with a chatbot or LLM API.
- Add AI-driven TTS voice and lip-syncing.
- Use sentiment and emotion detection for natural reactions.
- Automate streaming and memory for full autonomy.

For detailed setup, revisit the first part of this guide or see ARwall’s step-by-step tutorials on how to make a VTuber.

Can I use a premade VTuber model?

Yes, premade models are widely used and customizable. As long as you have usage rights or a license, you can pair an AI personality with a premade model and modify its voice and movement to make it unique.

10. The Future of AI VTubers

The VTubing landscape is rapidly shifting from human-controlled avatars to AI-powered digital entertainers.

With virtual production companies like ARwall merging cinematic XR technology with AI language and animation systems, we’re moving toward an era where:

- AI VTubers host shows, podcasts, and games autonomously.
- Fans can “train” or “raise” their favorite digital idols.
- Real-time, interactive storytelling becomes a mainstream art form.

In other words, the line between creator and creation is blurring — and the future of entertainment lies in this symbiosis of human creativity and AI intelligence.

Bringing Your AI VTuber to Life with ARwall

Turning a VTuber model into an AI model is more than just adding automation — it’s about building a living, evolving character that entertains, connects, and inspires.

With tools like OpenAI, Unreal Engine, and ARwall’s virtual production ecosystem, you can now:

- Create fully autonomous AI VTubers
- Stream in cinematic XR stages
- Build custom personalities and voices
- Monetize your digital brand globally

Whether you’re an indie creator or a production studio, this is your opportunity to lead the next wave of AI-driven entertainment.

So, are you ready to bring your AI VTuber to life?

Visit ARwall to explore how our LED volume stages, virtual production, and AI-powered visualization tools can help you create the next generation of virtual performers.